Haskell Showroom: How to switch between multiple kubernetes clusters and namespaces

A while ago I decided that I was done writing anything in bash. I just won’t do it anymore! Instead I’ve started writing everything, even the smallest of tools, in Haskell.

I just don’t think it’s possible to write good and maintainable software with bash, no matter how simple the tool might be. In my opinion, the main benefit of bash is that it’s so easy to distribute to end users. There’s no special installation or configuration: you just download a script and run it. With Haskell I’m aiming to distribute statically linked binaries (although in simple cases dynamically linked binaries work just fine as well).[1]

This series of posts, titled “Haskell Showroom”, is my attempt at showcasing what Haskell can be used for. It’s also my answer to a question I get asked a lot:

"What is Haskell a good fit for?"

Haskell has a very good reputation when it comes to writing compilers, but it’s a general-purpose programming language and it really can be used for all sorts of things. I mainly use it for writing web apps and CLI tools.

In this first post of the series I will talk about denv, a tool I wrote to help me “manage environments”. While it can manage (and switch between) various kinds of environments, in this post I will focus on how it helps with switching between multiple kubernetes clusters in a sane and simple way.

The problem

I work with a bunch of kubernetes clusters, some at work for various clients and some for personal projects. It’s sometimes hard and confusing to switch between these clusters (and namespaces within a cluster) while confidently knowing which cluster (and namespace) you’re currently working on.

The “default” way kube handles this is by writing the configurations for all the clusters into one single YAML file: ~/.kube/config.

Then you have to use a combination of kubectl config set-context and kubectl config use-context, which in turn mutate the global config file: set-context edits a context’s settings (such as its namespace), and use-context rewrites the current-context field.

This, to me, feels clumsy, and it does not address the part where we confidently know which cluster (and namespace) we’re working on.[2]

There are tools that try to address this, such as kubectx, kubens and kube-ps1. However, from what I can tell, they all fall short in a couple of ways:

- They still rely on a single global config file that they mutate using kubectl behind the scenes.

There’s something about “mutating global state” that just does not sit well with me.[3] Apart from that, it’s a hard requirement for me to be able to keep the different projects I’m working on separate.

- All of these appear to be written in bash

I think the main reason tools like this get written in bash is that it’s easy to inject environment variables into the currently running shell (a sourced script runs inside that shell). A custom tool, on the other hand, runs as a child process of your shell, and a child process can’t change the environment variables of its parent process (your shell). Later in the post I’ll show a way to work around this.
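The parent/child restriction is easy to observe from Haskell itself. The minimal sketch below uses System.Process from GHC’s process package. The function names and the DENV_DEMO and DENV_INHERITED variables are made up for illustration. It shows that environment variables flow from parent to child, but an export inside a child shell never reaches the parent.

```haskell
import System.Environment (lookupEnv, setEnv)
import System.Process (readProcess)

-- Variables set in this (parent) process are inherited by a child shell.
parentToChild :: IO String
parentToChild = do
  setEnv "DENV_INHERITED" "from-parent"
  readProcess "sh" ["-c", "printf %s \"$DENV_INHERITED\""] ""

-- A variable exported inside a child shell stays in the child:
-- the child prints it, but the parent's own lookup finds nothing.
childToParent :: IO (String, Maybe String)
childToParent = do
  childSees <- readProcess "sh" ["-c", "export DENV_DEMO=hello; printf %s \"$DENV_DEMO\""] ""
  parentSees <- lookupEnv "DENV_DEMO"
  pure (childSees, parentSees)
```

In GHCi, childToParent evaluates to ("hello",Nothing), as long as DENV_DEMO isn’t already set in your environment.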

The proposed solution

First things first, let’s get rid of the global config file:

```sh
# Don't remove the old file. Back it up just in case you need to extract
# auth info for each cluster and break it up into separate files
mv ~/.kube/config ~/.kube/config.old
touch ~/.kube/config
chmod 400 ~/.kube/config
```

This should prevent any tools from the k8s ecosystem from modifying that file.

However, since we can’t use the default file, we have to set the KUBECONFIG environment variable to something like ~/.kube/customer1-prod.yaml. All of the tooling from the k8s ecosystem (like kubectl, kops, helm, etc.) respects this environment variable.

This is especially useful when creating a cluster with kops: kops saves a configuration file for you once the cluster is created, so it will write it to a new file rather than mutate the one single file.

So, it’s crucial to run export KUBECONFIG=~/.kube/customer1-prod.yaml before running any kubectl (or similar) commands. This makes sure that each cluster is isolated in its own config file.

There’s a problem, though: this is tedious, and it doesn’t show us in our terminal which cluster we’re currently working on.

Also, we have to add --namespace=<NAMESPACE> to every kubectl command.
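To make the bookkeeping concrete, here is a minimal Haskell sketch of what every invocation needs when done by hand: a KUBECONFIG that applies only to one child process, plus an explicit --namespace. kubectlIn is a hypothetical helper, not part of denv. It only builds a process description with System.Process and does not run anything.

```haskell
import System.Environment (getEnvironment)
import System.Process

-- Hypothetical helper: a kubectl invocation whose KUBECONFIG and namespace
-- apply to this one child process only, leaving the parent shell untouched.
kubectlIn :: FilePath -> String -> [String] -> IO CreateProcess
kubectlIn kubeconfig namespace args = do
  parentEnv <- getEnvironment
  -- env = Just ... replaces the child's whole environment, so start from
  -- the parent's variables and override KUBECONFIG.
  let childEnv = ("KUBECONFIG", kubeconfig) : filter ((/= "KUBECONFIG") . fst) parentEnv
      base = proc "kubectl" (("--namespace=" ++ namespace) : args)
  pure (base {env = Just childEnv})
```

Passing the result to readCreateProcess (or createProcess) would run kubectl. Repeating both settings for every single command is exactly the tedium described above.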

Using “denv”

Let’s install denv.

Add the following alias to your .bashrc or .zshrc:

```sh
alias k='kubectl --namespace=${KUBECTL_NAMESPACE:-default}'
```

Add this hook at the end:

```sh
eval "$(denv hook ZSH)"
```

or eval "$(denv hook BASH)" for bash.
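The ${KUBECTL_NAMESPACE:-default} expansion in the alias falls back to default when the variable is unset or set to the empty string. The minimal Haskell sketch below mirrors that rule. namespaceOrDefault and currentNamespaceFlag are hypothetical names used only for illustration.

```haskell
import System.Environment (lookupEnv)

-- Mirrors the shell expansion ${KUBECTL_NAMESPACE:-default}: fall back to
-- "default" when the variable is unset *or* set to the empty string.
namespaceOrDefault :: Maybe String -> String
namespaceOrDefault (Just ns) | not (null ns) = ns
namespaceOrDefault _ = "default"

-- Hypothetical helper: the --namespace flag the k alias would pass right now.
currentNamespaceFlag :: IO String
currentNamespaceFlag = do
  ns <- lookupEnv "KUBECTL_NAMESPACE"
  pure ("--namespace=" ++ namespaceOrDefault ns)
```

The empty-string case is where this differs from a plain fromMaybe "default", which would pass an empty value straight through.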

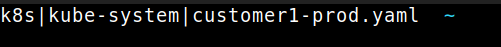

Run denv kube -p ~/.kube/customer1-prod.yaml -n kube-system to activate the customer1 cluster within the kube-system namespace. Notice how the prompt now tells us which cluster and namespace we’re working on.
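The command takes a required -p (the kubeconfig path) and an optional -n (the namespace). The sketch below shows one way to parse those two flags into a path and a Maybe namespace using System.Console.GetOpt from base. It makes no claim about how denv parses its arguments. KubeFlag, kubeOptions and parseKubeArgs are hypothetical names.

```haskell
import System.Console.GetOpt

type KubeProjectName = String
type KubeNamespace = String

-- One constructor per flag used in the command above.
data KubeFlag = ProjectFlag FilePath | NamespaceFlag String

kubeOptions :: [OptDescr KubeFlag]
kubeOptions =
  [ Option ['p'] [] (ReqArg ProjectFlag "KUBECONFIG") "path to the cluster's kubeconfig file"
  , Option ['n'] [] (ReqArg NamespaceFlag "NAMESPACE") "namespace to work in"
  ]

-- -p is required; -n is optional (Nothing means "use the default namespace").
parseKubeArgs :: [String] -> Either String (KubeProjectName, Maybe KubeNamespace)
parseKubeArgs argv =
  case getOpt Permute kubeOptions argv of
    (flags, [], []) ->
      case ([p | ProjectFlag p <- flags], [n | NamespaceFlag n <- flags]) of
        ([p], []) -> Right (p, Nothing)
        ([p], [n]) -> Right (p, Just n)
        ([], _) -> Left "missing required flag -p"
        _ -> Left "each of -p and -n may be given at most once"
    (_, extra@(_ : _), []) -> Left ("unexpected arguments: " ++ unwords extra)
    (_, _, errs) -> Left (concat errs ++ usageInfo usage kubeOptions)
  where
    usage = "usage: denv kube -p <kubeconfig> [-n <namespace>]"
```

Here, parseKubeArgs ["-p", "/tmp/k.yaml"] gives Right ("/tmp/k.yaml", Nothing). That pair lines up with the KubeProjectName and Maybe KubeNamespace arguments shown later in the post.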

You may be thinking that the same thing could be done with an .envrc file and direnv, which would automatically export the above variables.

While I have a lot of respect for direnv, and I still draw inspiration for a lot of stuff from their repo, I have two issues with it:

- I don’t like the fact that changing to a different directory will automatically inject things into my environment and automagically change my configuration for who knows what. I much prefer the semantics of the workon command from Python’s virtualenvwrapper, where you have to explicitly activate and deactivate an environment before anything changes in your running session. And it works independently of which directory you’re currently in.[4]
- It requires that I change my current working directory to the specific directory where the .envrc file is located. This does not work well with some of my workflows (it would require duplicating the .envrc file in a couple of places).

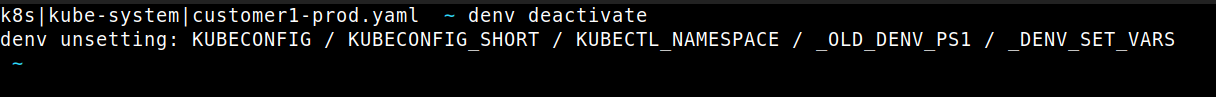

What denv kube brings to the table is that it forces you to activate a certain cluster/namespace before you can do anything with it, and to deactivate that specific cluster once you’re done working with it. Deactivating unsets the above environment variables, so there is no way that a long-running terminal somewhere still has access to a cluster you’re not aware of.
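Deactivation only works if the shell remembers what was set. The sketch below shows one way to do that bookkeeping. On activation, it records the names of the exported variables in one extra variable. On deactivation, it turns that record back into a list of names to unset. This is not denv’s code. The _DENV_SET_VARS name is taken from the SpecialVariable type later in the post, but the comma-separated format is an assumption. A fuller version would also restore PS1 from _OLD_DENV_PS1 rather than simply unsetting it.

```haskell
import Data.List (intercalate)

-- Bookkeeping variable; the comma-separated format is assumed for this sketch.
trackingVar :: String
trackingVar = "_DENV_SET_VARS"

-- Activation: the variables to export, plus a record of their names.
activate :: [(String, String)] -> [(String, String)]
activate vars = vars ++ [(trackingVar, intercalate "," (map fst vars))]

-- Deactivation: given the tracking variable's current value (if any),
-- the names to unset, including the tracking variable itself.
deactivate :: Maybe String -> [String]
deactivate Nothing = []
deactivate (Just names) = filter (not . null) (splitOn ',' names) ++ [trackingVar]

-- Split a string on every occurrence of a separator character.
splitOn :: Char -> String -> [String]
splitOn c s = case break (== c) s of
  (chunk, []) -> [chunk]
  (chunk, _ : rest) -> chunk : splitOn c rest
```

Because the record travels with the shell session, deactivation does not need to know which cluster was activated. It only reads back what was set.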

How it works

Let’s define our cluster (I call it a project) and namespace, which we will parse from the -p and -n flags respectively:

```haskell
-- Parsed from the -p flag: the path to the cluster's kubeconfig file
type KubeProjectName = String
-- Parsed from the -n flag
type KubeNamespace = String
```

We also need a type that lists the kube-specific environment variables we’re dealing with:

```haskell
-- The kube-specific environment variables that denv manages
data KubeVariable
  = KubeConfig
  | KubeConfigShort
  | KubectlNamespace
  deriving (Eq)
```

We will provide a Show typeclass instance so that we can convert these to a String later on.

```haskell
-- Map each constructor to the environment variable name it stands for
instance Show KubeVariable where
  show KubeConfig = "KUBECONFIG"
  show KubeConfigShort = "KUBECONFIG_SHORT"
  show KubectlNamespace = "KUBECTL_NAMESPACE"
```

Apart from the kube-specific variables, we have some special variables that we will track:

```haskell
-- Shell-level variables denv tracks alongside the kube ones
data SpecialVariable
  = Prompt
  | OldPrompt
  | DenvSetVars
  deriving (Eq)

instance Show SpecialVariable where
  show Prompt = "PS1"
  show OldPrompt = "_OLD_DENV_PS1"
  show DenvSetVars = "_DENV_SET_VARS"
```

Great, now that we have all these types, we need a way to tell our program whether we want to Set or Unset a given variable. This is important because we also want to be able to deactivate an environment, that is to say, unset all of the environment variables that we’ve injected into our shell session.

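```haskell
-- Requires {-# LANGUAGE GADTs #-}; T is Data.Text, Typeable comes from Data.Typeable.
-- The existential constructors let one list mix KubeVariable and SpecialVariable names.
data DenvVariable where
  Set :: (Eq a, Show a, Typeable a) => a -> T.Text -> DenvVariable
  Unset :: (Eq a, Show a, Typeable a) => a -> DenvVariable
```

Let’s go ahead and create our environment. We’ll use the mkKubeEnv function, which takes the project name and namespace and creates the environment: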

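```haskell
import Control.Monad (unless)
import Data.Maybe (fromMaybe)
import qualified Data.Text as T
import System.Directory (doesFileExist)
import System.Exit (die)
import System.FilePath (takeFileName)

-- checkEnv, withVarTracking, ps1, mkEscapedText and writeRc are defined elsewhere in denv.
mkKubeEnv :: KubeProjectName -> Maybe KubeNamespace -> IO ()
mkKubeEnv p n = do
  checkEnv
  -- Refuse to activate a cluster whose kubeconfig file is missing
  exists <- doesFileExist p
  unless exists (die $ "ERROR: Kubeconfig does not exist: " ++ p)
  -- Short file name for the prompt, and "default" when -n is omitted
  let p' = takeFileName p
  let n' = fromMaybe "default" n
  let env =
        withVarTracking
          Nothing
          [ Set KubeConfig $ T.pack p
          , Set KubeConfigShort $ T.pack p'
          , Set KubectlNamespace $ T.pack n'
          , Set OldPrompt ps1
          , Set Prompt $
              mkEscapedText "k8s|$KUBECTL_NAMESPACE|$KUBECONFIG_SHORT $PS1"
          ]
  writeRc env
```

writeRc is a function with the type signature writeRc :: [DenvVariable] -> IO (), and it does two things: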

- It converts Set KubeConfig "~/.kube/customer1-prod.yaml" to export KUBECONFIG=~/.kube/customer1-prod.yaml, or Unset KubeConfig to unset KUBECONFIG.
- It writes all of these environment variables to ~/.denv.
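Here is a self-contained sketch of that conversion step. It repeats the post’s KubeVariable and DenvVariable types and leaves out SpecialVariable for brevity. It then renders each Set or Unset as one shell line. This is not denv’s writeRc. In particular, the single-quoting is an addition for the sketch, so that values with spaces or quotes survive. The post’s example output is unquoted. writeRcTo is a hypothetical name.

```haskell
{-# LANGUAGE GADTs #-}
{-# LANGUAGE OverloadedStrings #-}

import qualified Data.Text as T
import qualified Data.Text.IO as TIO
import Data.Typeable (Typeable)

-- Types as defined earlier in the post (SpecialVariable omitted for brevity).
data KubeVariable = KubeConfig | KubeConfigShort | KubectlNamespace
  deriving (Eq)

instance Show KubeVariable where
  show KubeConfig = "KUBECONFIG"
  show KubeConfigShort = "KUBECONFIG_SHORT"
  show KubectlNamespace = "KUBECTL_NAMESPACE"

data DenvVariable where
  Set :: (Eq a, Show a, Typeable a) => a -> T.Text -> DenvVariable
  Unset :: (Eq a, Show a, Typeable a) => a -> DenvVariable

-- POSIX single-quoting: wrap in '...' and turn every ' into '\''
-- so the value reaches the shell verbatim.
shellQuote :: T.Text -> T.Text
shellQuote v = "'" <> T.replace "'" "'\\''" v <> "'"

-- One shell line per variable; the name comes from the Show instance.
renderVar :: DenvVariable -> T.Text
renderVar (Set name value) = "export " <> T.pack (show name) <> "=" <> shellQuote value
renderVar (Unset name) = "unset " <> T.pack (show name)

renderRc :: [DenvVariable] -> T.Text
renderRc = T.unlines . map renderVar

-- Hypothetical: write the script to a file (the post says denv uses ~/.denv).
writeRcTo :: FilePath -> [DenvVariable] -> IO ()
writeRcTo path = TIO.writeFile path . renderRc
```

With quoting, ~ is not expanded inside a value. That is fine for -p ~/..., because the shell has already expanded the path before denv sees it.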

Once the ~/.denv file is in place, the eval "$(denv hook BASH)" that we put in our .bashrc will check for it and inject the environment variables into the current shell session.

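This is actually a very clever way of getting variables from a child process into its parent process (denv -> shell). denv never touches the shell’s environment directly; it only writes a file, which the shell itself then applies. All credit for the idea goes to the direnv authors.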
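The hook is what closes the loop. The Haskell sketch below generates the kind of shell snippet that eval could run. This is an assumption about the mechanism, not denv’s actual hook output, and the _denv_hook function name is made up. The generated snippet defines a function that sources ~/.denv in the current shell if the file exists. It then registers that function to run before every prompt, using bash’s PROMPT_COMMAND or zsh’s precmd_functions. Deleting the file after sourcing it, so each change is applied only once, is a design choice made for the sketch.

```haskell
-- Hypothetical sketch of what a `denv hook` command could print;
-- the real denv output may differ.
data Shell = Bash | Zsh

hookScript :: Shell -> String
hookScript shell = unlines (hookFunction ++ register shell)
  where
    -- Before each prompt: if a script was left behind, source it in the
    -- current shell (so its export/unset lines take effect), then delete it.
    hookFunction =
      [ "_denv_hook() {"
      , "  if [ -f \"$HOME/.denv\" ]; then"
      , "    . \"$HOME/.denv\""
      , "    rm -f \"$HOME/.denv\""
      , "  fi"
      , "}"
      ]
    -- Run the function before every prompt, keeping any existing hooks.
    register Bash = ["PROMPT_COMMAND=\"_denv_hook${PROMPT_COMMAND:+;$PROMPT_COMMAND}\""]
    register Zsh = ["precmd_functions+=(_denv_hook)"]
```

With this shape, eval "$(...)" runs once at shell startup and only defines and registers the function. Each later denv kube call takes effect when the next prompt is drawn.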

If you’re interested in the details, please refer to the source code in the GitHub repo.

Summary

In this post I’ve shown you how I go about switching between multiple kubernetes clusters while averting potential disasters and confusion about which cluster I’m currently working on.

I used Haskell to solve this particular problem because it lets me easily extend the tool with new functionality, refactor furiously without fear, and avoid shooting myself in the foot by not treating everything as a String.[5]

This code is far from perfect and could definitely use more type safety, but hopefully I was able to demonstrate that Haskell doesn’t have to be scary (no fancy type gymnastics) and is very much usable in an “imperative” way when that’s what it takes to get the job done (and move on).

In the second post in this series I will talk about denv aws and how to switch between multiple AWS accounts, use temporary credentials, multi-factor authentication and key rotation.

[1] While static compilation can be a bit of a chore with Haskell, if you don’t have any special C dependencies, even dynamically linked binaries will most likely work on various Linux distros (since most of them have the necessary shared libraries installed already).

[2] I’ve observed this to be a very big stumbling block for folks starting out with kubernetes.

[3] One of the main reasons why I like Haskell and functional programming in general: it eliminates all sorts of bugs related to global mutable state.

[4] One of the main reasons why I like Haskell and functional programming in general: it eliminates all sorts of bugs related to global mutable state.

[5] I can’t count the number of times I’ve debugged a typo in Bash or Python.

Did you like this post?

If your organization needs help with implementing modern DevOps practices, scaling your infrastructure, or improving engineer productivity, I can help! I offer a variety of services.
